Google kicked off its annual I/O developer conference at Shoreline Amphitheater in Mountain View, California. Here are some of the biggest announcements from the Day 1 keynote. There will be more to come over the next couple of days, so follow along with all things Google I/O on TechCrunch.

Just before the keynote, Google announced it is rebranding its Google Research division to Google AI. The move signals how Google has increasingly focused R&D on computer vision, natural language processing, and neural networks.

What Google announced: Google announced a “continued conversation” update to Google Assistant that makes talking to the Assistant feel more natural. Now, instead of having to say “Hey Google” or “OK Google” every time you want to give a command, you’ll only have to do so the first time. The company is also adding a new feature that lets you ask multiple questions within the same request. All this will roll out in the coming weeks.

Why it’s important: In a typical conversation, odds are you’ll ask follow-up questions if you didn’t get the answer you wanted. But having to say “Hey Google” every single time is jarring: it breaks the flow and makes the exchange feel unnatural. If Google wants to be a significant player in voice interfaces, the interaction has to feel like a conversation, not just a series of queries.

What Google announced: Google Photos already makes it easy to correct photos with built-in editing tools and AI-powered features for automatically creating collages, movies and stylized photos. Now Photos is getting more AI-powered fixes: a new version of the app will suggest quick fixes and tweaks like rotations, brightness corrections and pops of color, and it will be able to colorize black-and-white photos.

Why it’s important: Google is working to become the hub for all of your photos, and it can woo potential users by offering powerful tools to edit, sort, and modify them. Each additional photo gives Google more data and helps it get better at image recognition, which not only improves the Photos experience but also strengthens the tools behind Google’s other services. Google, at its heart, is a search company, and it needs a lot of data to get visual search right.

What Google announced: Smart Displays were the talk of Google’s CES push this year, but we haven’t heard much about Google’s Echo Show competitor since. At I/O, we got a little more insight into the company’s smart display efforts. Google’s first Smart Displays will launch in July and, of course, will be powered by Google Assistant and YouTube. It’s clear that the company has invested resources into building a visual-first version of Assistant, justifying the addition of a screen to the experience.

Why it’s important: Users are increasingly accustomed to the idea of a smart device sitting in their living room and answering their questions. But Google is looking to create a system where users can ask questions and then get some kind of visual display for actions that just can’t be resolved with a voice interface. Google Assistant handles the voice part of that equation, and YouTube is a natural complement to it.

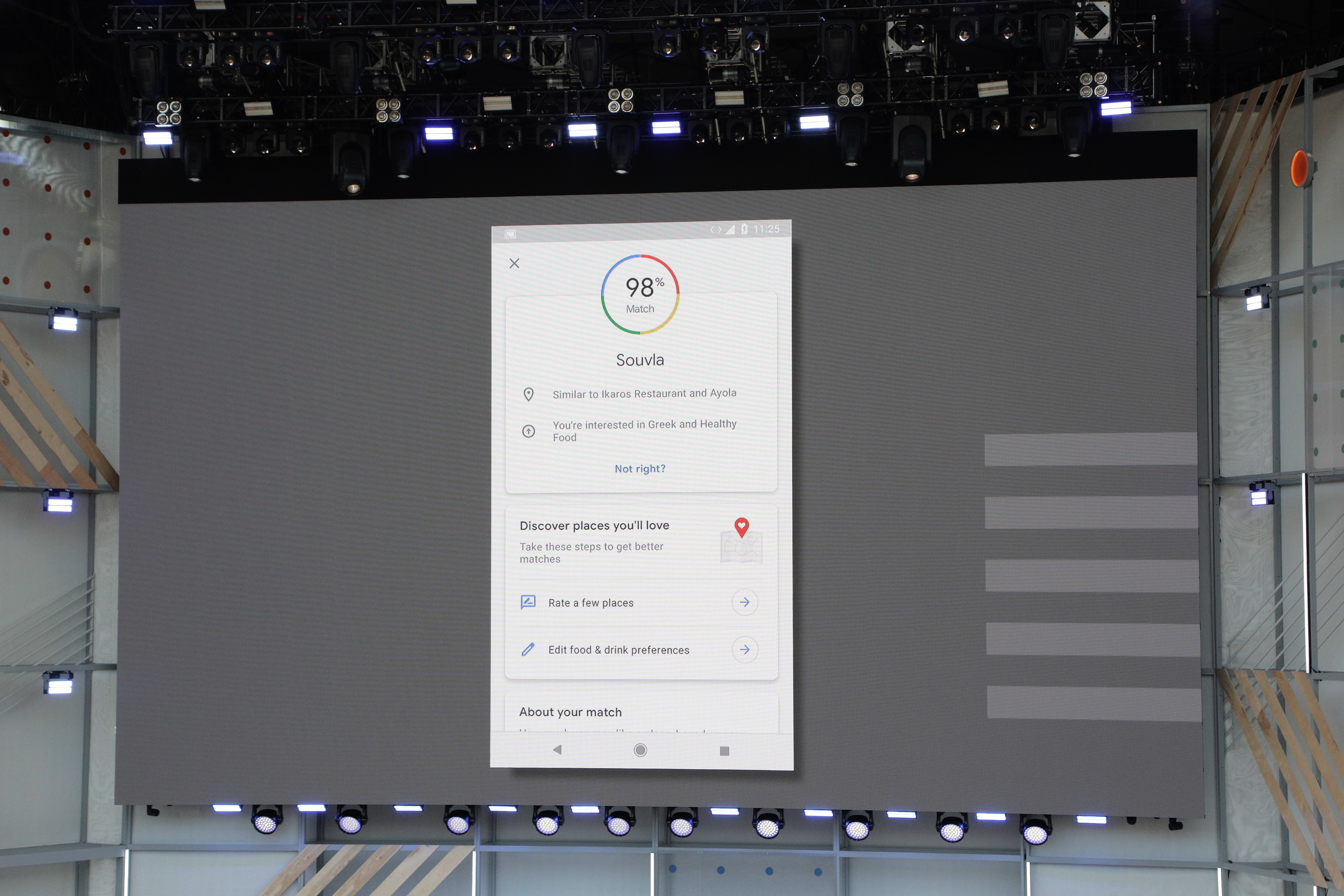

What Google announced: Google Assistant is coming to Google Maps, available on iOS and Android this summer. The addition is meant to give users better recommendations. Google has long worked to make Maps feel more personalized, and since Maps is now about far more than just directions, the company is introducing new features to surface better recommendations for local places.

The Maps integration also combines the camera, computer vision technology, and Google Maps with Street View. With the camera/Maps combination, it really looks like you’ve jumped inside Street View. Google Lens can do things like identify buildings, or even dog breeds, just by pointing your camera at the object in question. It will also be able to identify text.

Why it’s important: Maps is one of Google’s biggest and most important products. There’s a lot of excitement around augmented reality — you can point to phenomena like Pokémon Go — and companies are just starting to scratch the surface of the best use cases for it. Figuring out directions seems like such a natural use case for a camera, and while it was a bit of a technical feat, it gives Google yet another perk for its Maps users to keep them inside the service and not switch over to alternatives. Again, with Google, everything comes back to the data, and it’s able to capture more data if users stick around in its apps.

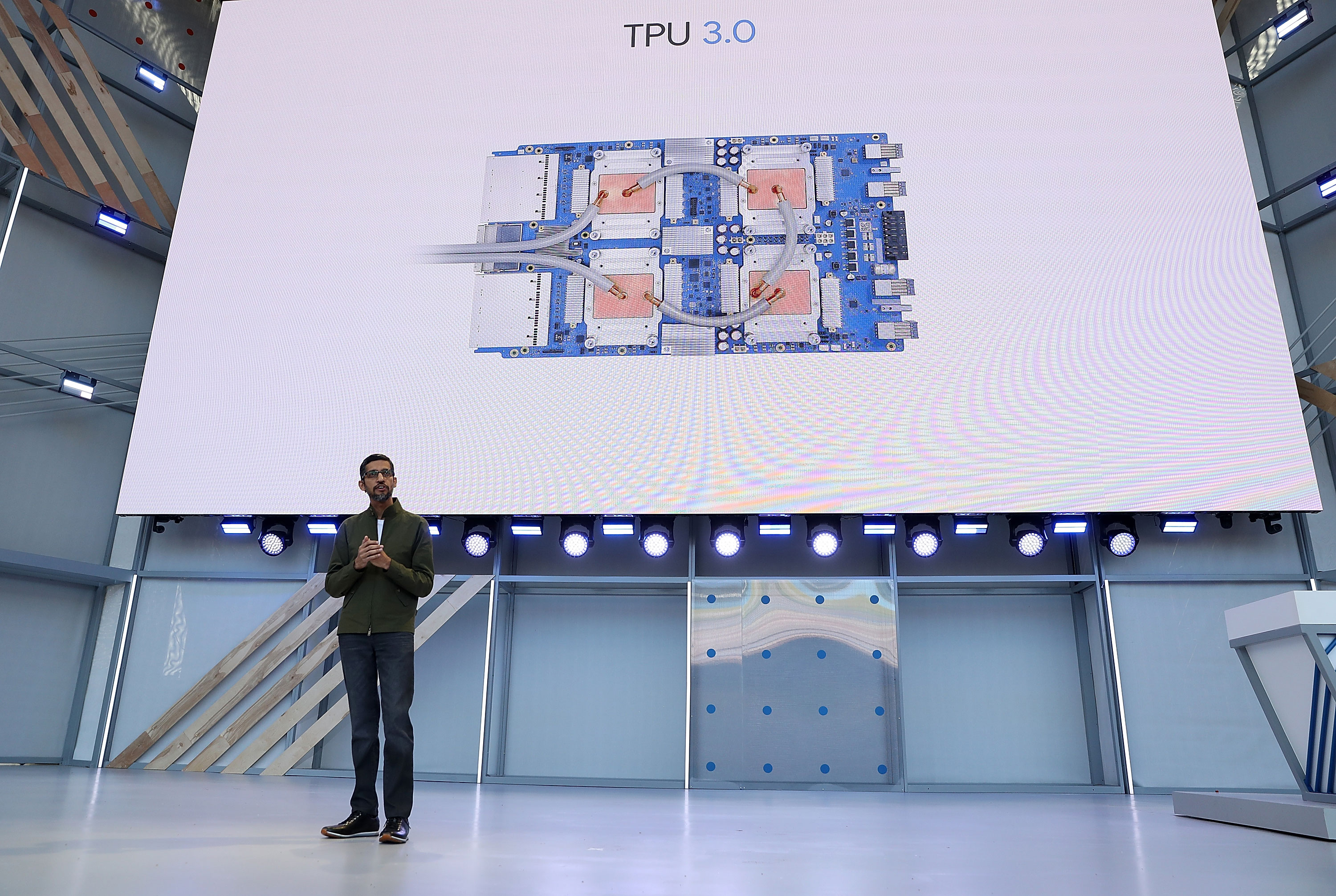

What Google announced: As the war to build customized AI hardware heats up, Google said it is rolling out its third generation of silicon, the Tensor Processing Unit 3.0. Google CEO Sundar Pichai said a TPU 3.0 pod is 8x more powerful than last year’s, with up to 100 petaflops of performance. Google joins pretty much every other major company in looking to create custom silicon to handle its machine learning operations.

Why it’s important: There’s a race to create the best machine learning tools for developers. Whether that’s at the framework level, with tools like TensorFlow or PyTorch, or at the hardware level, the company that’s able to lock developers into its ecosystem will have an advantage over its competitors. That’s especially important as Google looks to build its cloud platform, GCP, into a massive business while going up against Amazon’s AWS and Microsoft Azure. Giving developers, who are already adopting TensorFlow en masse, a way to speed up their operations can help Google keep wooing them into its ecosystem.

MOUNTAIN VIEW, CA – MAY 08: Google CEO Sundar Pichai delivers the keynote address at the Google I/O 2018 Conference at Shoreline Amphitheater on May 8, 2018 in Mountain View, California. Google’s two day developer conference runs through Wednesday May 9. (Photo by Justin Sullivan/Getty Images)

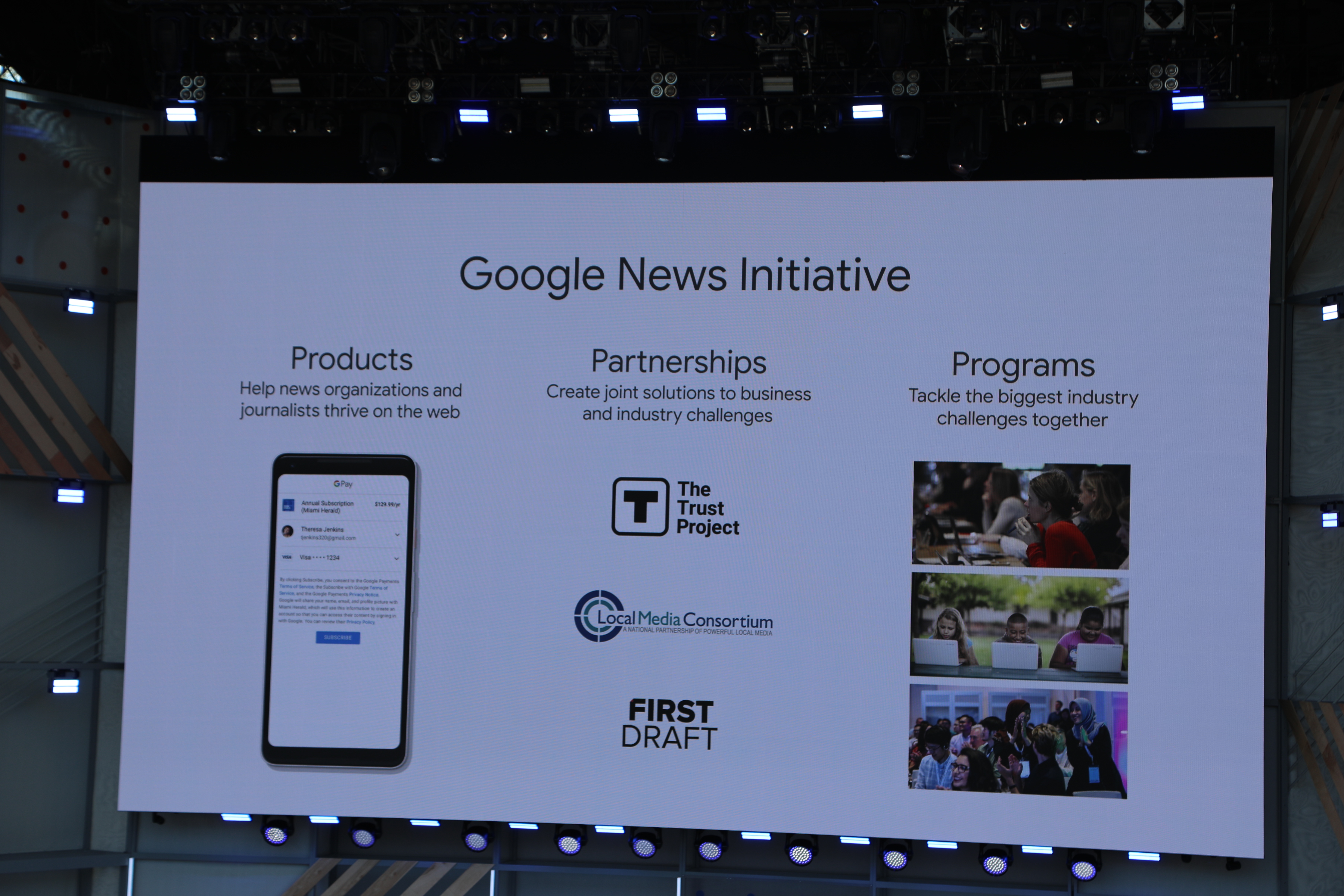

What Google announced: Watch out, Facebook. Google is also planning to leverage AI in a revamped version of Google News. The AI-powered, redesigned news app will “allow users to keep up with the news they care about, understand the full story, and enjoy and support the publishers they trust.” It draws on elements of Newsstand, Google’s digital magazine app, and YouTube, and introduces new features like “newscasts” and “full coverage” to give people a summary or a more holistic view of a news story.

Why it’s important: Facebook’s main product is literally called “News Feed,” and it serves as a major source of information for a non-trivial portion of the planet. But Facebook is embroiled in a scandal over personal data of as many as 87 million users ending up in the hands of a political research firm, and there are a lot of questions about Facebook’s algorithms and whether they surface legitimate information. That’s a huge opening that Google could exploit by offering a better news product and, once again, locking users into its ecosystem.

What Google announced: Google unveiled ML Kit, a new software development kit for app developers on iOS and Android that allows them to integrate pre-built, Google-provided machine learning models into apps. The models support text recognition, face detection, barcode scanning, image labeling and landmark recognition.

Why it’s important: Machine learning tools have enabled a new wave of applications, including ones built on top of image recognition or speech detection. But even though frameworks like TensorFlow have made it easier to build applications that tap those tools, it can still take a high level of expertise to get them up and running. Developers often figure out the best use cases for new tools and devices, and development kits like ML Kit lower the barrier to entry, giving developers without a ton of machine learning expertise a playground to start experimenting with interesting use cases.

So when will you be able to actually play with all these new features? The Android P beta is available today, and you can find the upgrade here.
